Deep Learning

NEC HPC platform for Deep Learning

The last few years have seen growing interest in Deep Learning techniques, thanks to the major breakthroughs achieved in computer vision and natural language processing. These successes have encouraged researchers to explore many other areas of application. For example, even large computational tasks that traditionally belong to the high-performance computing domain, such as fluid mechanics, biology and astrophysics, may benefit from Deep Learning to improve the quality of simulations and increase computational performance.

At the core of Deep Learning is a set of algorithms called artificial neural networks (ANNs). Their quick evolution has been propelled by the availability of software frameworks that simplify development. NEC has been a pioneer in developing such frameworks, contributing to one of the first instances of such software: Torch. Since then a number of alternatives have emerged, with PyTorch, TensorFlow, MXNet and CNTK among the most popular deep learning frameworks.

Conceptually, these frameworks provide a ready-to-use set of neural network building blocks and a high-level API to implement the neural network architecture. Writing a new neural network is as simple as putting together a few lines of code in the Python programming language. The framework then takes care of all the operations required to train and execute the neural network. Thanks to the high-level API, the user of the framework can ignore the low-level hardware details and focus on the design of the neural network algorithm. However, the high-level API abstractions may also reduce the efficiency of the low-level computations that implement them. For instance, high-level APIs generally expose neural network layers as atomic components of a neural network, which requires the layers to execute serially on the underlying hardware.
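As a rough illustration of the layer-by-layer model described above, the following sketch builds a tiny network from atomic layers in plain Python. The classes `Dense`, `ReLU` and `Sequential` are hypothetical stand-ins written for this example; they are not the API of PyTorch, TensorFlow or any other real framework.

```python
# Toy illustration only: a hypothetical mini-framework, not a real library API.

class Dense:
    """Fully connected layer: y = W x + b, using plain Python lists."""

    def __init__(self, weights, bias):
        self.weights = weights  # one row of weights per output unit
        self.bias = bias

    def __call__(self, x):
        return [sum(w * v for w, v in zip(row, x)) + b
                for row, b in zip(self.weights, self.bias)]


class ReLU:
    """Elementwise max(0, x)."""

    def __call__(self, x):
        return [max(0.0, v) for v in x]


class Sequential:
    """Runs the layers one after another, each as an atomic step."""

    def __init__(self, *layers):
        self.layers = layers

    def __call__(self, x):
        for layer in self.layers:
            # Each layer finishes and materializes its full output
            # before the next layer starts.
            x = layer(x)
        return x


# "A few lines of code" are enough to describe the network.
model = Sequential(
    Dense([[1.0, -1.0], [0.5, 0.5]], [0.0, -1.0]),
    ReLU(),
    Dense([[2.0, 1.0]], [0.1]),
)
output = model([3.0, 1.0])
print(output)
```

The loop in `Sequential.__call__` is where the serial, layer-at-a-time execution comes from: every intermediate result is written out in full before the next layer reads it.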

NEC Deep Learning Platform

Building on its extensive experience in both AI applications and platforms, NEC designed a next-generation deep learning platform aiming at portability, extensibility, usability and efficiency. NEC’s deep learning platform (DLP) integrates seamlessly into popular frameworks, rather than introducing “yet another API”. As such, in contrast to other techniques, it does not replace any functionality of the original deep learning frameworks but complements them.

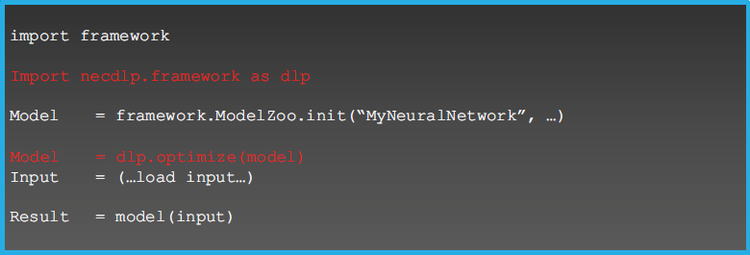

Users continue writing neural networks using their favorite frameworks, e.g. TensorFlow or PyTorch. The NEC platform transparently analyzes the neural network descriptions to provide additional hardware compatibility and improve performance. This step leverages NEC’s expertise in automated code generation techniques, allowing the system to create on the fly a computation library that is optimized specifically for the user’s neural network. The generated code is compiled into implementations that are functionally equivalent from the user’s point of view: they perform the exact same set of computations on the data, but more efficiently. A code snippet that uses NEC DLP looks as follows.

NEC Deep Learning Platform: A code snippet

Two lines of code are all that a user has to add to existing deep learning code in order to use NEC DLP and benefit from its optimizations. From an architectural perspective, NEC DLP is shown in the following figure.

NEC Deep Learning Platform Architecture

A Peek into the Optimization Techniques

Deep Learning frameworks such as TensorFlow and PyTorch execute their neural networks layer by layer, which can result in inefficient use of the memory hierarchy of the executing hardware and thereby in long execution times. NEC DLP addresses the problem by generating a computation graph tailored to the neural network at hand. The NEC platform: 1) analyzes the neural network structure to extract its computation graph; 2) transforms this graph to obtain more efficient computations, taking the structure of the graph itself into account; 3) generates computation libraries that are specific to the transformed computation graph and to the target hardware. NEC DLP comes with a large set of optimization patterns that can modify the network’s structure, improve the reuse of data buffers, and optimize the use of the device’s memory hierarchy and computation units.
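One well-known transformation of this kind is operator fusion, in which a chain of elementwise operations is collapsed into a single pass over the data. The sketch below is an assumption about what such a pass can look like in general, written in plain Python; the function names (`run_layer_by_layer`, `fuse_elementwise` and the op constructors) are hypothetical and do not describe NEC DLP internals or its actual optimization patterns.

```python
# Illustrative sketch of operator fusion; all names are hypothetical.

def scale(factor):
    return ("scale", lambda v: v * factor)


def shift(offset):
    return ("shift", lambda v: v + offset)


def relu():
    return ("relu", lambda v: max(0.0, v))


def run_layer_by_layer(graph, data):
    """One full pass over the data and one new buffer per operation."""
    buffers_allocated = 0
    for _name, fn in graph:
        data = [fn(v) for v in data]  # intermediate buffer
        buffers_allocated += 1
    return data, buffers_allocated


def fuse_elementwise(graph):
    """Graph transformation: collapse a chain of elementwise ops into one op."""
    fns = [fn for _name, fn in graph]
    fused_name = "+".join(name for name, _fn in graph)

    def fused(v):
        # Apply every original op to one element while it is still "hot",
        # instead of writing it to an intermediate buffer between ops.
        for fn in fns:
            v = fn(v)
        return v

    return [(fused_name, fused)]


graph = [scale(2.0), shift(-3.0), relu()]
data = [0.5, 1.0, 2.0, 4.0]

baseline, baseline_buffers = run_layer_by_layer(graph, data)
fused_graph = fuse_elementwise(graph)
optimized, optimized_buffers = run_layer_by_layer(fused_graph, data)

print(baseline, baseline_buffers)    # same values, three buffers
print(optimized, optimized_buffers)  # same values, one buffer
```

Both graphs compute the same values, which mirrors the functional equivalence the platform promises; only the number of passes and intermediate buffers changes. A production system would additionally generate hardware-specific code for the fused operation, which this sketch does not attempt.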

Neural Network Deployment

Once a neural network model has been trained, it typically needs to be integrated into an application. Since models are developed using a Deep Learning framework, running the developed neural network requires shipping the entire framework together with the application. This is often inefficient, in particular for deployments where performance is critical. As a result, a developed neural network often goes through an expensive engineering effort to port it to a stand-alone implementation that can run independently of the original framework.

NEC DLP addresses this problem by supporting the deployment of neural network models to any hardware supported by the framework. When required, NEC DLP can generate a software library that implements the neural network for the selected target device, e.g. NEC SX-Aurora TSUBASA. The deployment function relies on the same optimization engine and architecture as the rest of NEC DLP. The generated libraries contain only the neural network execution functions, the parameters and a minimal set of helper functions, which significantly reduces the size of these libraries and simplifies their integration into the applications that need them.
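The idea of a generated, framework-independent library can be sketched in miniature as follows. This is only a toy under stated assumptions: it emits Python source rather than a compiled library for a specific device, and `generate_standalone_source` is a hypothetical helper invented for this example, not a function of NEC DLP.

```python
# Hypothetical sketch: emit a stand-alone module for a trained one-layer model.
# The generated source embeds the parameters and depends on no framework.

def generate_standalone_source(weights, bias):
    """Return Python source for a self-contained predict() function."""
    return (
        "WEIGHTS = {weights!r}\n"
        "BIAS = {bias!r}\n"
        "\n"
        "def predict(x):\n"
        "    out = []\n"
        "    for row, b in zip(WEIGHTS, BIAS):\n"
        "        acc = b\n"
        "        for w, v in zip(row, x):\n"
        "            acc += w * v\n"
        "        out.append(max(0.0, acc))\n"
        "    return out\n"
    ).format(weights=weights, bias=bias)


# Parameters as they might come out of training (made-up values).
source = generate_standalone_source([[1.0, 2.0], [-1.0, 0.5]], [0.5, 0.0])

# Load the generated source into its own namespace, much as an application
# would import the shipped file.
namespace = {}
exec(source, namespace)
result = namespace["predict"]([2.0, 1.0])
print(result)
```

The generated text contains only the execution function and the parameters, so the application that uses it needs nothing from the framework in which the model was trained.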