Docker support on SX-Aurora TSUBASA

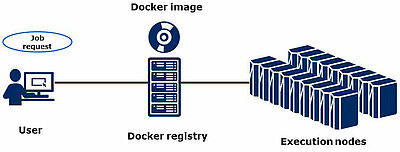

SX-Aurora TSUBASA supports Docker as a container environment. Users can easily deploy their applications to execution nodes using Docker. When submitting a job, a user specifies a Docker image that is registered in the Docker registry. The following pages briefly describe how to use the Docker environment from the viewpoints of administrators and users.

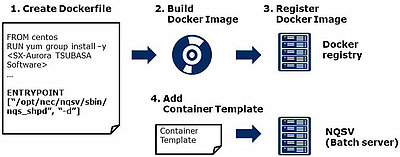

This page shows how the administrator prepares Docker images. First, the administrator writes a Dockerfile and builds an image that includes the environment for each application. The administrator then registers (pushes) the image to the Docker registry. In addition, the administrator adds a Container Template for the image to NQSV so that users can refer to it.
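The build-and-register part of this flow maps onto two standard Docker CLI commands, `docker build -t <ref> <context>` and `docker push <ref>`. The Python sketch below only assembles those command lines as argument lists; the registry host, image name, and tag in the example, as well as the names `ImageSpec` and `admin_build_plan`, are hypothetical and not part of SX-Aurora TSUBASA or NQSV. Adding the Container Template to NQSV is a separate step.

```python
from dataclasses import dataclass


@dataclass
class ImageSpec:
    """Hypothetical description of an application image in a Docker registry."""
    registry: str  # registry host[:port], e.g. "registry.example.com:5000" (example value)
    name: str      # image name, e.g. "myapp"
    tag: str = "latest"

    @property
    def reference(self) -> str:
        # Full image reference as used by `docker build -t` and `docker push`.
        return f"{self.registry}/{self.name}:{self.tag}"


def admin_build_plan(spec: ImageSpec, context_dir: str = ".") -> list[list[str]]:
    """Return the docker commands (as argv lists) to build an image and register it."""
    ref = spec.reference
    return [
        ["docker", "build", "-t", ref, context_dir],  # build from the Dockerfile in context_dir
        ["docker", "push", ref],                      # register the image in the registry
    ]


for argv in admin_build_plan(ImageSpec("registry.example.com:5000", "myapp", "1.0")):
    print(" ".join(argv))
# docker build -t registry.example.com:5000/myapp:1.0 .
# docker push registry.example.com:5000/myapp:1.0
```

Returning argument lists rather than shell strings keeps the plan easy to inspect; each list could be passed to `subprocess.run(argv, check=True)` on a host with Docker installed.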

Container Template

- Holds information about an OS image and the resources of a Docker provisioning environment:
  - Template name
  - Image name
  - Number of CPUs
  - Memory size
  - Number of GPUs
- The administrator uses qmgr(1) to register a template with NQSV

`create container_template=<template_name> image=<image> cpu=<cpunum> memsz=<memory_size> [gpu=<gpunum>] [boot_timeout=<timeout>] [stop_timeout=<timeout>] [custom="<custom_define>"] [comment="<comment>"]`

The Container Template holds the image name and the resources the environment requires, such as the number of CPUs and the memory size. The administrator registers the template with NQSV using the qmgr(1) command.
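To make the structure of the `create container_template` subcommand concrete, the sketch below models a template as a Python dataclass and renders the subcommand text following the syntax shown on this slide: required fields first, optional fields only when set, and `custom`/`comment` in double quotes. The class name, the method name, and all example values are hypothetical; the accepted value formats for memory size and timeouts are not specified here, so they are passed through as given. Running the resulting line through qmgr(1) is left to the administrator.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ContainerTemplate:
    """Hypothetical model of the fields of an NQSV Container Template."""
    name: str
    image: str
    cpu: int
    memsz: str                          # memory size, passed through verbatim
    gpu: Optional[int] = None
    boot_timeout: Optional[int] = None
    stop_timeout: Optional[int] = None
    custom: Optional[str] = None
    comment: Optional[str] = None

    def __post_init__(self) -> None:
        if self.cpu < 1:
            raise ValueError("cpu must be at least 1")

    def to_qmgr(self) -> str:
        """Render the `create container_template` subcommand as text."""
        # Required fields, in the order of the documented syntax.
        parts = [
            f"create container_template={self.name}",
            f"image={self.image}",
            f"cpu={self.cpu}",
            f"memsz={self.memsz}",
        ]
        # Optional unquoted fields are emitted only when they are set.
        for key in ("gpu", "boot_timeout", "stop_timeout"):
            value = getattr(self, key)
            if value is not None:
                parts.append(f"{key}={value}")
        # custom and comment are double-quoted in the documented syntax.
        for key in ("custom", "comment"):
            value = getattr(self, key)
            if value is not None:
                parts.append(f'{key}="{value}"')
        return " ".join(parts)


tmpl = ContainerTemplate("tmpl1", "registry.example.com/myapp:1.0", cpu=4, memsz="16G", gpu=1, comment="demo")
print(tmpl.to_qmgr())
# create container_template=tmpl1 image=registry.example.com/myapp:1.0 cpu=4 memsz=16G gpu=1 comment="demo"
```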

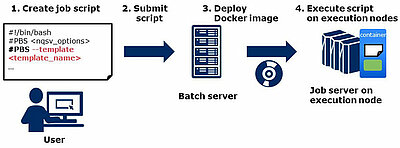

This page shows how a user runs an application in containers as a job. The user selects the Docker image by specifying the corresponding Container Template with the `--template` option in the job script. Once the user submits the job, the batch server deploys the Docker image referred to in the script to the execution nodes, and the application runs in containers on those nodes.
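As an illustration of the user side, the sketch below writes a minimal job script that selects a Container Template with the `--template` option. It assumes that NQSV reads embedded qsub options from `#PBS` lines in the script; that directive prefix, the function name `render_job_script`, the template name, and the application command are assumptions for illustration, so check the NQSV documentation for the exact directive syntax before use.

```python
def render_job_script(template: str, command: str, shell: str = "/bin/sh") -> str:
    """Return the text of a minimal job script that runs `command` in a container.

    Assumption: NQSV takes embedded qsub options from '#PBS' directive lines.
    """
    lines = [
        f"#!{shell}",
        f"#PBS --template={template}",  # select the Container Template (hypothetical name)
        command,                        # the application to run inside the container
    ]
    return "\n".join(lines) + "\n"


script = render_job_script("tmpl1", "./myapp")
print(script, end="")
# #!/bin/sh
# #PBS --template=tmpl1
# ./myapp
```

The generated text would be saved to a file and submitted with `qsub`; keeping the template name as the only container-specific line reflects that the image itself is resolved through the template registered by the administrator.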

Technical Details about VE Process Isolation

How are VE processes isolated in each container?

- VEOS uses Linux namespaces to isolate each container
  - PID, UID, GID, mount points, IPC, and network resources
- A system call request is executed by the corresponding pseudo process (ve_exec)
  - For example, getpid():
    - The getpid() request is offloaded
    - Linux returns the PID in the namespace

A natural technical question is how VE processes are isolated in each container. The real VE processes run on the VE (Vector Engine), yet each one is isolated within the view of its container on the VH (Vector Host).

The answer is that VEOS uses Linux namespaces for isolation. A system call request from a VE process is offloaded to its corresponding pseudo process (ve_exec) inside the container, so the PID, UID, GID, and other resources are resolved in the namespaces bound to that pseudo process.

Suppose that VE process1, which belongs to container1, calls getpid(). The system call request is offloaded to the corresponding pseudo process1 in container1. Linux returns the PID in namespace1 to pseudo process1. Therefore, VE process1 receives the result as a process in container1.
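The getpid() example can be traced with a toy model in plain Python. This is not VEOS code and creates no real namespaces; the classes `PidNamespace`, `PseudoProcess`, and `VEProcess` and the helper `spawn` are hypothetical stand-ins that only mimic the bookkeeping described above: the VE process never asks Linux directly, it offloads the call to its pseudo process, and the answer is the PID local to that pseudo process's namespace.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class PidNamespace:
    """Toy PID namespace: maps host-wide PIDs to PIDs local to this namespace."""
    name: str
    _next_local: count = field(default_factory=lambda: count(1), init=False, repr=False)
    _local: dict = field(default_factory=dict, init=False, repr=False)

    def register(self, host_pid: int) -> int:
        # Allocate the next namespace-local PID, starting at 1.
        local = next(self._next_local)
        self._local[host_pid] = local
        return local

    def getpid(self, host_pid: int) -> int:
        # What Linux would report to a process living inside this namespace.
        return self._local[host_pid]


@dataclass
class PseudoProcess:
    """Stand-in for ve_exec on the VH: executes system calls on behalf of one VE process."""
    host_pid: int
    ns: PidNamespace

    def handle(self, syscall: str) -> int:
        if syscall == "getpid":
            return self.ns.getpid(self.host_pid)
        raise NotImplementedError(syscall)


@dataclass
class VEProcess:
    """Stand-in for the real process on the VE; it offloads every system call."""
    pseudo: PseudoProcess

    def getpid(self) -> int:
        return self.pseudo.handle("getpid")


def spawn(ns: PidNamespace, host_pid: int) -> VEProcess:
    """Start a pseudo process in `ns` and bind a VE process to it."""
    ns.register(host_pid)
    return VEProcess(PseudoProcess(host_pid, ns))


ns1, ns2 = PidNamespace("container1"), PidNamespace("container2")
ve1 = spawn(ns1, host_pid=4242)
ve2 = spawn(ns2, host_pid=4243)
print(ve1.getpid(), ve2.getpid())  # 1 1
```

Although the two pseudo processes have different host PIDs, each VE process sees PID 1 because the answer comes from its own container's namespace, which is the isolation property the slide describes.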

Singularity will be supported at the end of December 2020!

Upcoming Functionality

SX-Aurora TSUBASA will support Singularity as a new container environment at the end of December 2020. Singularity is widely used, especially in the HPC area.

- Intel and Xeon are trademarks of Intel Corporation in the U.S. and/or other countries.

- NVIDIA and Tesla are trademarks and/or registered trademarks of NVIDIA Corporation in the U.S. and other countries.

- Linux is a trademark or a registered trademark of Linus Torvalds in the U.S. and other countries.

- Proper nouns such as product names are registered trademarks or trademarks of individual manufacturers.